How are medical discoveries made? In a previous post (“The Art of Scientific Discovery”), I looked at several models by which science has progressed. A sharply focused, systematic pursuit led to the discovery of insulin. An accidental observation produced a drug to treat erectile dysfunction. Those, and the other examples of medical advances described there, involved more-or-less conventional approaches. But there’s an unconventional approach that appeals to people confronting difficult medical issues. It involves, in one form or another, self-experimentation. It’s an approach that has some adherents and many critics, and some advantages but also some obvious drawbacks.

Limb regeneration by self-experimentation

The case of Dr. Curtis “Curt” Connors, who lost his arm while on active duty with the US Army, provides a graphic case study of the effects of self-experimentation. He experimented with various approaches to limb regeneration after returning to civilian life. Because reptiles can sometimes regenerate lost limbs, Connors made a serum from reptilian DNA that he thought might cause his lost arm to regrow. It did, but there were some unfortunate side effects: it turned him into The Lizard, avowed enemy of Peter Parker, otherwise known as Spider-Man. That story began back in 1963, and is chronicled in the graphic medium known as Marvel comics. His case will do little to convince the scientific establishment of the value of self-experimentation, but it does illustrate a certain public fascination with it.

The usual approach

Back in the real world, there’s a mad scramble to develop and distribute a vaccine that will prevent COVID-19, the disease caused by the SARS-CoV-2 coronavirus. Billions are being spent on several types of development, including some that are relatively novel (for example, the use of RNA to make human cells produce a viral protein that provokes an immune response). It’s hoped that one or more of these will soon be successful, so that the world can start to get back to normal.

Conventional testing of a new pharmaceutical in humans involves several phases. The first simply determines whether the product is toxic, or has serious side effects for people. (Usually, the test medication has already been found to show efficacy in laboratory experiments or animals.) This phase one testing involves a limited number of volunteers, who are given increasing doses of the new medication. If it passes this test, it is then administered to a larger group of people to see if it actually works, in whole or in part, to treat the condition. It doesn’t have to be perfect, but it does have to be better than what’s available. In the case of COVID-19, there is no vaccine yet, so there’s nothing to compare treatment to, except no treatment.

Complete protection may not be necessary. A vaccine effective at the 70 or 80% level may provide protection to the population. Even a lower level may work for COVID-19, if it is coupled to some forms of social isolation (mask wearing in public, maintaining distancing).

The third stage of conventional testing is carried out on thousands (in the case of a potential COVID-19 vaccine, tens of thousands) of people. They are randomly assigned to treatment and placebo groups, and the results are analyzed after the code is broken (that is, after it is revealed who received what) to see whether the treatment group got fewer, or less serious, infections. This is expensive and time-consuming, although it is going on at “warp speed”, according to the US government.
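The logic of such a trial can be sketched in a few lines of Python. This is only an illustration, not the protocol of any actual trial: the participant count, the seed, and the case counts (30 and 100) are made-up numbers, and the function names `randomize` and `vaccine_efficacy` are hypothetical. Efficacy is computed in the usual way, as one minus the ratio of the infection rate in the vaccine group to the rate in the placebo group.

```python
import random


def randomize(participant_ids, seed=0):
    """Randomly split participants into two equal-sized arms."""
    ids = list(participant_ids)
    random.Random(seed).shuffle(ids)  # seeded so the split is reproducible
    half = len(ids) // 2
    return ids[:half], ids[half:]


def vaccine_efficacy(cases_vaccine, n_vaccine, cases_placebo, n_placebo):
    """Efficacy = 1 - (attack rate with vaccine / attack rate with placebo)."""
    attack_vaccine = cases_vaccine / n_vaccine
    attack_placebo = cases_placebo / n_placebo
    return 1.0 - attack_vaccine / attack_placebo


# Hypothetical trial: 30,000 volunteers, split at random into two arms.
vaccine_arm, placebo_arm = randomize(range(30_000), seed=42)

# Hypothetical outcome once the code is broken: 30 infections among the
# vaccinated, 100 among those who received the placebo.
efficacy = vaccine_efficacy(30, len(vaccine_arm), 100, len(placebo_arm))
print(f"Estimated efficacy: {efficacy:.0%}")
```

A real analysis would also attach a confidence interval to the estimate, which is one reason the trials need tens of thousands of participants.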

DIY vaccines against COVID-19

Another approach to anti-coronavirus vaccine development is the “Do It Yourself” approach. A recent article in the New York Times (September 1, 2020) described the work of a man who runs a biotech company in Seattle, who offers a vaccine he developed for $400 a pop. So far, 30 people have availed themselves of it. The vaccine has not undergone conventional testing, nor does there appear to be evidence that it works.

It’s not clear what good can possibly come from this adventure. It’s not going to determine the effectiveness of this DIY vaccine. There have been 80,000 cases of COVID-19 in Washington State as of early September 2020. With a population of 7.6 million, that amounts to about one case for every 95 people. The rate of infection currently is lower than it was at the peak, so looking for a result (no infection) in a group of 30 people will not be productive either way: chances are, none of them will be infected anyway, and any result will be statistically meaningless. The Food and Drug Administration has sent a serious letter to the microbiologist who is promoting this vaccine (he runs the company that makes it).
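For readers who want to check the arithmetic, here is a rough sketch in Python using the case count, population, and group size quoted above. It rests on a loud simplifying assumption: that each of the 30 people independently faces a risk equal to the state’s cumulative case rate so far. Since the current infection rate is lower than at the peak, this probably overstates their risk, which only strengthens the point.

```python
# Figures quoted in the text (Washington State, early September 2020).
CASES = 80_000
POPULATION = 7_600_000
GROUP_SIZE = 30  # people who reportedly took the DIY vaccine

# Cumulative share of the population with a recorded case.
risk = CASES / POPULATION
people_per_case = 1 / risk

# Assumption: each person independently faces this same risk.
expected_cases = risk * GROUP_SIZE
p_none = (1 - risk) ** GROUP_SIZE

print(f"One case per {people_per_case:.0f} people")
print(f"Expected cases in a group of {GROUP_SIZE}: {expected_cases:.2f}")
print(f"Chance that nobody in the group is infected: {p_none:.0%}")
```

Under these assumptions, an unvaccinated group of 30 would most likely see no infections at all, so observing none among the vaccinated says nothing about whether the vaccine works.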

The same New York Times article also described another Do-It-Yourself approach to preventing COVID-19, although that one takes a more scientific approach. It is supported by a large group of serious people, including scientists with impressive credentials. This “Rapid Deployment Vaccine Collaborative” (RaDVaC) uses a synthetic mix of SARS-CoV-2 antigenic peptides, which people then self-administer. The outcome measured is the immune response, which is being thoroughly and systematically assessed. This is an outcome that is directly relevant to anti-COVID-19 immunity, and data from all participants is relevant. The approach has been carefully designed to contain only safe ingredients.

Improving your life

The two DIY approaches to COVID-19 vaccination differ in important ways. The first (the Washington State effort) has not published a protocol or preliminary results, only the recommendation of its purveyor, who gets money when it’s used. The second, the RaDVaC enterprise, is based on a defined, published protocol. It’s hard to see how the Washington State effort can provide useful information, except perhaps if there’s a catastrophe. The RaDVaC effort, however, will provide evidence that is directly relevant (either there is, or is not, an immune response to the virus).

A “lifestyle” DIY effort that has some scientific backing, at least in animals, is calorie restriction. The subject goes back to at least the 16th century, when an Italian diabetic named Luigi Cornaro wrote a treatise on his own efforts to control his health. At age 40, he was suffering from what we recognize as adult-onset diabetes. He undertook to improve his health by reducing his intake of food and drink, bolstered by the belief that temperance was virtuous and would improve his condition. He immediately became much healthier, and at age 83 wrote a book describing his life, “Discourses on the Sober Life” (the Italian version “Discorsi Della Vita Sobria” sounds lovelier). Cornaro wrote three similar treatises, the last at age 95, and died at 102.

In the early 1930s, an American nutritional scientist at Cornell, Clive McCay, read about Cornaro’s experience, and wondered whether calorie restriction, which is what the Italian had engaged in, would extend the lifespan of animals. He used rats, and got a clear result: restricting the food intake extended male rats’ lifespan by 60%. (Earlier studies had produced mixed results, with some calorie-restriction regimens leading to shorter lifespans. This was because the restricted diets starved the animals of essential micronutrients like vitamins. McCay was smarter and more up-to-date in his nutritional information: his restricted diets contained the needed levels of essential micronutrients.) Subsequent work showed that calorie restriction increases the lifespans of yeast, roundworms, spiders, fruit flies, fish and mice, in addition to rats. What about humans?

Understandably, we don’t yet have statistically convincing evidence that restricting calories extends the lifespan of humans. A group called The Calorie Restriction Society, made up of volunteers who eat less than normal (the term of art is “hypoalimentation”), is dedicated to determining whether it does, at least for its adherents. It’s going to take a long time to accumulate enough evidence. However, it’s already known that limiting food intake improves several health measures, such as blood pressure, cholesterol levels, and risk factors for cardiovascular disease and diabetes. But this uncomfortable form of self-experimentation has the unfortunate side effect of producing an almost constant craving for food. Even if you don’t live to be 100, it will probably seem like it.

Ulcers are an infectious disease

The question of what causes gastric or duodenal ulcers was settled medical science in 1980: such ulcers are the result of excess stomach acid, which is caused by stress, diet or smoking. Mild cases were treated with Tums or Pepto-Bismol, and more serious ones with a doctor’s prescription for Zantac or Tagamet. The symptoms and damage of ulcers are caused by a breakdown in the lining of the stomach or upper part of the small intestine. A breach in the ‘acid proofing’ of the stomach or intestinal walls can ultimately lead to bleeding or perforation, potentially life-threatening conditions. This understanding is true, but also incomplete. There is another component in the etiology of ulcers, one that was not predicted and whose discovery led to conclusions that initially encountered strenuous resistance from the medical community. And self-experimentation played an important role in its discovery.

Until the early 1990s, the treatment of moderate or mild ulcers focused on two lines of therapy. Excess acid could be neutralized by alkaline medications, usually containing bismuth (which is the key ingredient of Pepto-Bismol), magnesium, or calcium salts. And the production of acid could be inhibited with drugs such as Tagamet or Zantac, which block the cellular mechanisms of acid secretion. A number of drug companies built fortunes on these therapies, and they did help, but they didn’t treat the underlying condition, which often returned. Although it was commonly accepted that bland food, a reduction in stimulating beverages such as coffee and alcohol, and cessation of smoking helped to manage ulcers, it was really only the last of these three factors, smoking, that had a provable link to ulcers.

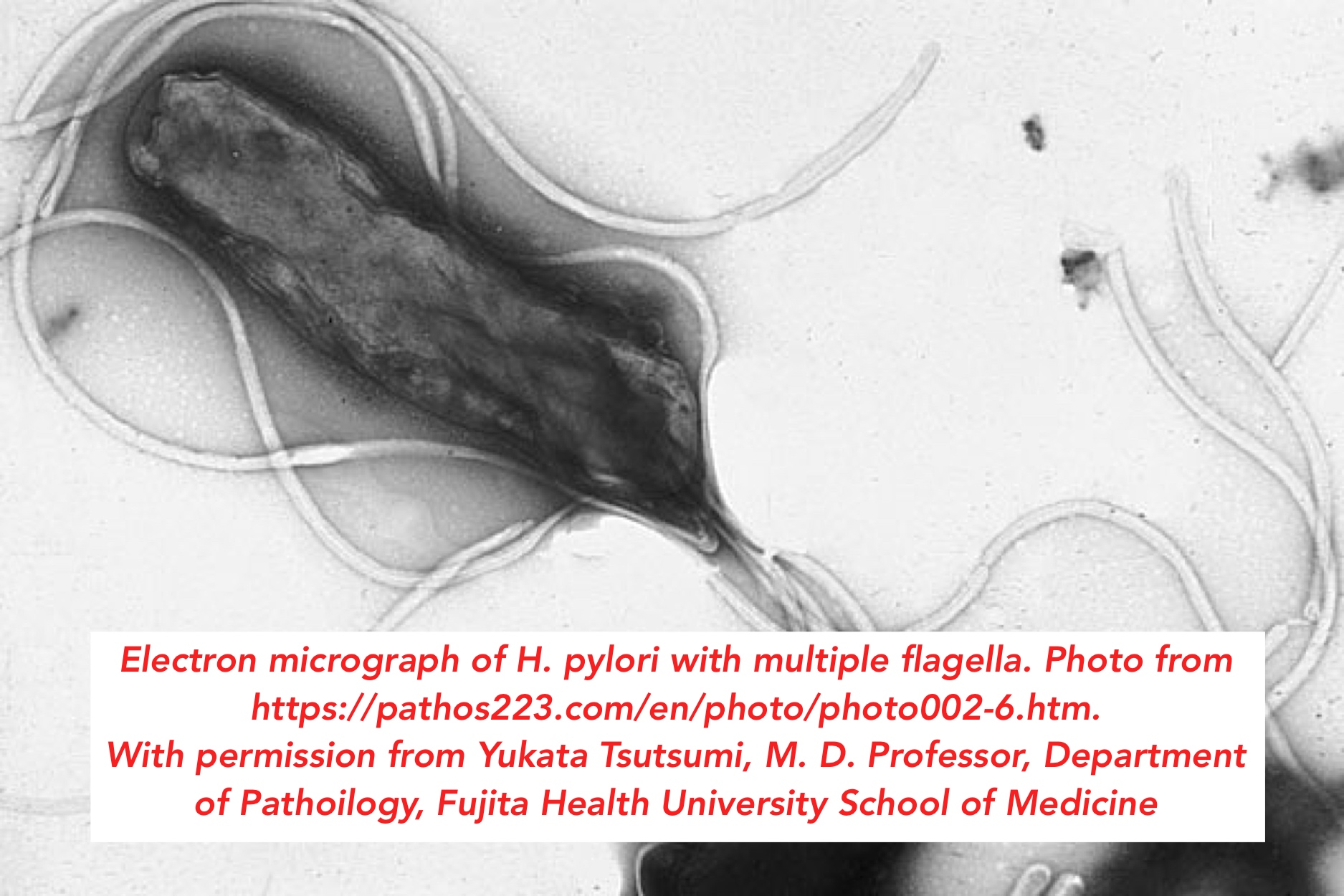

There the matter stood until it piqued the curiosity of two Australian doctors. Dr. Robin Warren was a pathologist at the Royal Perth Hospital in Western Australia. He was interested in gastritis, an inflammation of the mucous membrane of the stomach that is often a precursor to ulcer disease. A young medical trainee, Dr. Barry Marshall, approached him in 1981. Dr. Marshall wanted to incorporate some research into his medical training. Together, the two addressed a curious finding that Dr. Warren had made regarding biopsies from people who suffered from gastritis and ulcer disease. He often noticed bacteria he couldn’t quite identify on the slides from ulcer biopsies. These bacteria (see figure above) had a twisted, helical shape, like those of the genus Campylobacter, but didn’t conform exactly to any known bacteria. They had been observed by other pathologists, but no one had taken the observation any further, or remarked on its possible medical significance. Drs. Warren and Marshall began to wonder whether these unusual bacteria were involved in the occurrence of the ulcer itself.

The way to push that idea further was to grow the bugs in vitro, in a Petri dish. Nobody had managed to do this. A fortuitous accident happened on the Easter long weekend of 1982. Due to the pressure of other work and the long holiday weekend, a culture dish containing the unusual gastric bacteria was left in the incubator much longer than usual in the clinical microbiology service lab. When examined on Tuesday after the long weekend, it contained small colonies of the new bacteria isolated from the ulcer patients. The ability to grow out the unusual bacteria provided the material for real science to begin.

By the spring of 1983, Marshall and Warren had found their novel bacteria in 100 patients who suffered from either gastritis or ulcers. They submitted a letter to the prestigious medical journal The Lancet, and also an abstract to the Gastroenterological Society of Australia. The secretary of that society informed them that he regretted that “. . . your research paper was not accepted for presentation on the programme… The number of abstracts we receive continues to increase and for this meeting 67 were submitted and we were able to accept 56.” It must have been disappointing to young Dr. Marshall to be told that his paper was among the 11 least interesting ones submitted. And so the Gastroenterological Society of Australia missed the chance to become the first venue to feature what has subsequently been called the most important discovery in gastroenterology of the 20th century.

The editors of The Lancet were also somewhat skeptical, but they agreed, after some to and fro, to publish the paper. A second paper, in 1984, found the editor of The Lancet in a more receptive mood. But it was a little difficult to find reviewers who agreed with his assessment of the importance of the work. Once that hurdle was cleared and the paper was published, the editor wrote a commentary about it, an unusual acknowledgement of its importance. The hypothesis was that ulcers might really be due to infection by the newly described helical bacteria.

From the lab bench to the bedside

Despite these publications, general acceptance of the idea that an infectious agent was important in ulcers was slow. The usual course of clinical trials would have required that Marshall and Warren first compare their observations of the bacteria in ulcer patient biopsies with the background level of infection in the population at large. Today we know that many people who have no symptoms of gastritis or ulcer disease have these bacteria in their gut, so their presence alone does not cause ulcers. Around 25% of adults have H. pylori in their gut, far more than the number of patients with ulcer disease. Secondly, Drs. Warren and Marshall would have had to carry out large-scale trials with antibiotics to prove that these protocols worked better than existing treatments, which did usually alleviate the symptoms, and sometimes also got rid of the bacteria (bismuth, which is present in some medications, kills the bacteria).

Frustrated, the young and somewhat impetuous Dr. Marshall decided to take matters into his own hands; he went with his gut. He wanted to see whether the bacteria, which were given the name Helicobacter pylori (H. pylori in the usual shorthand), caused gastritis, a precondition for ulcer disease. After establishing by biopsy that he didn’t have H. pylori in his gut already, he drank a culture containing a billion of them and waited. Nothing happened for 5 days; then he developed the symptoms of gastritis: halitosis (very bad breath), morning nausea, and vomiting. A second biopsy showed that H. pylori were now flourishing in his gut. Antibiotics cured him by killing the bacteria (he knew that they would before he took the nasty drink), but this reckless self-experiment turned the corner for the story, and its publication in the Medical Journal of Australia in 1985 made the world sit up and take notice. In a short time, compared to the usual path from discovery to treatment, this led to the development of combined treatments for ulcers, using both antibiotics that killed H. pylori and inhibitors that blocked acid production. This approach has revolutionized the treatment of gastric and duodenal ulcers.

The happy conclusion of all this is that sometimes you get lucky in aggressively pushing forward in unconventional ways. And also, that sometimes a radical idea needs a fearless champion. A conventional epidemiological study would have shown that most people with H. pylori do not have ulcers, which would have suggested there wasn’t much reason to look at this idea further. But that step was bypassed by Dr. Marshall’s impatient self-experimentation, and the pace of clinical trials was expedited. As a result of the unconventional outcome of this radical approach, the World Health Organisation agreed, in the early 1990s, that antibiotic treatment was the right way to treat ulcer disease. And in 2005, Barry J. Marshall and J. Robin Warren were jointly awarded the Nobel Prize in Physiology or Medicine, whose records tell the story of how it all happened.
